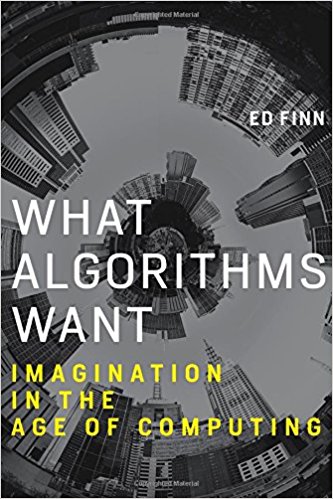

Let’s start with the title of your book: What Algorithms Want. Can we talk about algorithms having intention or agency?

For the most part, no. We spend a tremendous amount of energy inventing or imagining this kind of agency for algorithms, like when we go out of our way to learn the setup lines so Siri can tell a half-funny joke. But the myth of the omniscient, objective algorithm is so powerful that we often perceive agency in these systems. It’s tempting to treat these systems like Magic Eight balls, oracles of wisdom whose every utterance has some kind of special meaning. There is a story that this happened to Garry Kasparov in his first game against IBM’s Deep Blue in 1997. The computer made a very strange move that convinced Kasparov he was contending with a profoundly deep intelligence, and it threw him off his game, leading him to lose his second game and ultimately the whole match. As it turned out, the move was a bug, quietly corrected by IBM engineers after the first game.

Having said all of that, I think there are a few glimpses of real intention emerging from algorithmic systems. Consider Google’s DeepMind, which cut its teeth playing classic Atari games. Watching the system learn to play Brick Breaker raises the hair on the back of your neck. If we measure intention or autonomous agency by how often we are surprised by algorithms…I think they’re going to surprise us more and more.

Lots of people don’t understand much about algorithms at all. Does this matter?

Yes, because they understand more about us every day. We need to understand enough about algorithms to meet the standard of what my research colleagues call “informed consent.” If you don’t comprehend, at some basic level, how a platform like Facebook or Google interacts with you and your information, you are not really a user of that system. You’re just a product being marketed to others. In the book I frame this as a sort of literacy: a basic capacity to “read” how algorithms interact with human culture. Thinking of these systems as something more than code - as tangles of software, policies, people, assumptions, physical hardware, and so on - is very important as we continue to navigate collaborative relationships with algorithms that tell us what to read, where to go, who to date, and much more.

Algorithms are building up vast banks of data and a drive for more computation. What’s the effect of this?

There are two major effects that are visible now, and I suspect there are more to come. The first is that the apparatus for gathering all this information is creating a thickening layer of computation around the planet. If every car, camera, and toothbrush is sensing and reporting information about the physical world, we are changing reality and human behaviour in important ways. These systems have complex interdependencies, so the failure of something like Amazon Web Services or the Global Positioning System would have cascading effects on many other tools and platforms. More importantly, the experience of reality is changing. I’ve seen my kids poking at paper magazine pages, disappointed that they are not interactive screens. Many people today have only the fuzziest grasp of the geographies of their own cities because they are so dependent on algorithmic wayfinding. Computation is making many new things visible, but it also blurs and obscures.

The second effect is that as these massive archives multiply, their true depth and power are becoming increasingly illegible to humans. Complex algorithms assess billions of data points across thousands of dimensions. Human minds max out in the single digits, for the most part. So we are dependent on algorithms to gather, manage, and evaluate all of this data. We can only skim the surface of this ocean of computation through analogies, abstractions and other simplifications that by definition discard the nuances at play.

How does this drive towards computation intersect with human culture?

The surface-level effects are already coming into focus. Some actuaries in Japan lost their jobs to an installation of IBM’s Watson. Automated systems are writing news articles, composing music, providing medical advice and of course driving cars at levels of expertise rapidly approaching and at times exceeding human expertise.

The deeper effects are much harder to articulate. What happens to human imagination when it is channelled and inflected through a platform like Google, which is explicitly designed to help us on our “quest for knowledge”? Social media has already created a rapidly shifting kind of hive consciousness, where memes and fragments of information circulate and change without ever seeming to solidify into fixed ideas. In the book I write about the ways in which culture is becoming more process-oriented than output-oriented. It’s no longer about the individual work of art or criticism, but the ongoing stream or performance of that art. As we do more of our thinking through computation, this notion of process and persistent chains of interaction, of iteration, will become more important, from scholarly work showing every revision and peer review to works of music published as individual tracks and layers of sound.

You argue that algorithms should be understood in the context of philosophical traditions like consciousness. Could you expand?

One of the threads that informs our cultural reactions to algorithms today is the notion of the mind as a symbolic system. René Descartes and Gottfried Wilhelm von Leibniz were among the philosophers who argued for a deep link between language, consciousness and the construction of reality. The emergence of computation has offered a new way to frame this metaphor: language as a kind of operating system for the human mind. I start the book with a quote from Neal Stephenson’s novel Snow Crash, which goes one step further in imagining a universal operating system for the mind that can be hacked and reprogrammed.

The connections between computation and the mind are only getting more attention as machine learning experts continue to refine “neural networks” of simulated, simplified brain-like structures to solve particular challenges at the forefront of AI. Perhaps this is why we have been so long obsessed with recognising “a mind at work” in our computational systems. Alan Turing’s famous paper laying out the Turing Test also suggests in the next breath that such a test is absurd. In fact, Turing argues, if we do develop intelligent machines, we need to think of them in some sense as children and consider a parental relationship rather than expecting to recognise a fully-formed conscious being springing from the brow of Homo sapiens.

Early in the book, you talk about the cathedral as a metaphor for computation. What is useful/flawed about that metaphor?

The cathedral metaphor is quite common in conversations about software, not least because of Eric Raymond’s The Cathedral and the Bazaar, but my favourite formulation is a joke from a computer conference in the 1980s: software and cathedrals are much the same, in that first we build them, and then we pray. There is some deep truth to this, because it plays on the twin roles of the cathedral. On the one hand, it is a complex physical structure, a physical representation of an abstraction, a demonstration of wealth, power, faith and perseverance. But on the other hand the cathedral is a structure where many of the most important bits are invisible, hidden, unspoken. Software works like this too: we love the spectacle of the visible structure, its regularity and imposing façade. But in the end it’s all the unspoken, invisible, symbolic work happening around the edges of the visible interface that is truly important.

The flaws with the metaphor have to do with the fixity of cathedrals in our imaginations. Once you build it, it’s done (or at least changes usually take a very long time). But our contemporary software cathedrals are constantly evolving, not just in general but in very specific ways for each of us. Netflix talks about how they customize every aspect of the user experience for each individual user. We need to recognize that many of the most sophisticated computational platforms we use today are observing us while we observe them, adapting their behaviour while we are using them.

What is the relationship between rationalism and computation/algorithms?

I see two ways to think about this. The first is that computation is a form of implemented rationalism: a deliberate effort to create self-contained universes of code governed by reason, logic, mathematical laws, perfect and predictable causality. The algorithm has its contemporary roots in the notion of effective calculability, a space of mathematics where problems can be reliably solved in a finite span of time. The proofs of effective calculability emerged out of an effort to establish a rationalist floor to mathematics, to prove that the foundations of arithmetic and symbolic logic were consistent. The proofs provided by Alan Turing, Alonzo Church and others were quite dismaying to mathematicians, because the space of “effective calculability” they identified seemed so small in comparison to the vast and weird space of maths. But as time has gone on, we have continuously expanded this arena to incorporate more and more cultural life, from driving cars to computer-assisted vision.

This brings us to the second idea, the notion of computationalism. People like Stephen Wolfram speculate that the entire universe may be computational, and that every complex system will operate according to the same fundamental computational laws. This is the Isaac Asimov Foundation view of the universe, that given the right tools, everything is calculable, including human history.

And finally – should we be worried about an Orwellian future in which we are controlled by machines?

We’re controlled by machines every day. You may remember the trope of the alien who arrives on Earth only to decide that the highest form of life on the planet is the automobile. Today that alien would probably decide that smartphones are the masters of our society, telling us where to go and what to think about. The trick is to know what you’re signing up for - to know what choices are left off the menu, just as Orwell’s characters struggle to do in 1984. I think the Terminator future is fairly unlikely, but it will become increasingly important to mind the gap between our idealistic notions of what computation is and the real effects it has in the world.